We call duplicate content any block of content that totally or partially coincides with another, whether on the same domain or on a different one. Contrary to what most people think, duplicate content is most often found within the same website.

Google can penalize duplicate content, whether it is internal or external, and either way it can negatively affect organic rankings.

The goal of search engines like Google is to find what the user is looking for. To offer a good range of results that match the search, Google needs to know the different content that appears across the internet and identify what is of quality for the user. For this reason, Google discards duplicate content and keeps the pages it considers original and useful.

Types of duplicate content

We must differentiate between two types of duplicate content.

- Internal: duplicate content within the website itself, that is, pages on the same domain. The problem with this type of duplication is that it wastes Google's time on our site: Google's robots have a limited crawl budget, so you want that budget spent on optimized pages.

- External: one of the pages of our website contains full text or fragments copied from external pages. This is content copied between different websites, that is, two different domains with pages that share the same content.

When Google's crawlers detect a duplicate, the search engine can penalize it in different ways:

- Filtering it out of the search results

- Directly penalizing the page

- In the event of a complaint, a Google reviewer can check the page and decide whether to apply a manual penalty.

It is practically impossible for any website to be completely free of duplicate content.

Detection method

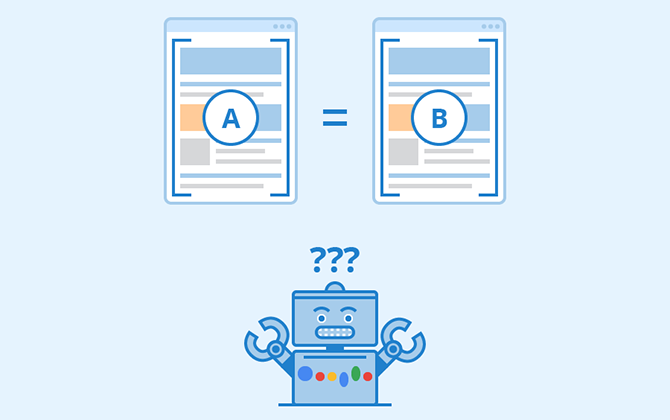

In short, Google computes a checksum (a kind of hash) for each page of a website. Checksums are generally used to detect errors that may have been introduced during transmission or storage; here, the checksum serves as a practically unique fingerprint of each document, based on the words on the page.

In this way, if Google detects that two pages have the same checksum, it identifies them as clones. These checksums verify the integrity of the data, not its authenticity: they show that two pages are identical, not which one is the original. That is why duplicate content detection and canonicalization are not the same.
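To make the idea concrete, here is a minimal Python sketch of checksum-based clone detection. It is not Google's implementation, which is not public: the tokenization (lowercased words) and the choice of SHA-256 are assumptions, and `page_checksum` and `find_clones` are hypothetical names.

```python
import hashlib
import re
from collections import defaultdict


def page_checksum(text: str) -> str:
    """Fingerprint a page from its words (assumed tokenization: lowercased words)."""
    words = re.findall(r"\w+", text.lower())
    # Joining the words with single spaces means that letter case,
    # punctuation and extra whitespace do not change the fingerprint.
    return hashlib.sha256(" ".join(words).encode("utf-8")).hexdigest()


def find_clones(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose checksums match; any group of 2+ URLs is a set of clones."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[page_checksum(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Red running shoes. Free shipping!",
    "https://example.com/shoes?ref=mail": "red running shoes  free shipping",
    "https://example.com/boots": "Leather hiking boots.",
}
print(find_clones(pages))
# [['https://example.com/shoes', 'https://example.com/shoes?ref=mail']]
```

Because this is an exact checksum, changing a single word produces a completely different fingerprint, so this sketch only catches pages whose words match exactly.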

What is canonicalization

In computing, canonicalization is the process of converting data that has more than one possible representation into a “standard,” “normal,” or canonical form. Put simply, to canonicalize means to choose the best URL for displaying a given piece of content. A canonical tag tells search engines which URL is the original, so that they prioritize it and give it relevance over the others, which are ignored. First, the clones are detected and grouped together as copies of one another; then the leading page among all these clones has to be chosen: that is canonicalization.
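Canonicalization in the computing sense can be illustrated with URLs, since the same page is often reachable through several addresses. The following Python sketch, using only `urllib.parse` from the standard library, rewrites a URL into one normal form. The set of ignored query parameters and the rules applied are assumptions for illustration; `normalize_url` is a hypothetical helper, and Google's choice of a canonical URL relies on many more signals.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters assumed not to change the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref"}
DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url: str) -> str:
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    netloc = parts.netloc.lower()
    # Drop the port when it is the scheme's default (e.g. :443 for https).
    default = DEFAULT_PORTS.get(scheme)
    if default is not None and netloc.endswith(f":{default}"):
        netloc = netloc[: -len(f":{default}")]
    path = parts.path or "/"
    # Remove ignored parameters and sort the rest so their order does not matter.
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    # The fragment (#...) is never sent to the server, so it is dropped.
    return urlunsplit((scheme, netloc, path, urlencode(params), ""))


print(normalize_url("HTTPS://Example.com:443/shoes?utm_source=mail&color=red#reviews"))
# https://example.com/shoes?color=red
```

Whether a trailing slash or a particular parameter changes the page depends on each site, so a real normalizer should only apply rules known to be safe for that site.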

Several algorithms try to detect and then remove boilerplate text from pages. Once the navigation and the footer are excluded from the checksum calculation, we are left with what is called the centerpiece: basically the core content of the page, which is what you want to examine.
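As a rough illustration of isolating the centerpiece, the Python sketch below uses the standard library's `html.parser` to drop any text inside `nav`, `header`, `footer`, `aside`, `script` and `style` elements before hashing. Treating those elements as boilerplate is an assumption; the algorithms mentioned above are not specified and may rely on other heuristics. `centerpiece` and `centerpiece_checksum` are hypothetical names.

```python
import hashlib
from html.parser import HTMLParser

# Elements treated as boilerplate in this sketch (an assumption).
BOILERPLATE_TAGS = {"nav", "header", "footer", "aside", "script", "style"}


class CenterpieceExtractor(HTMLParser):
    """Collects page text, skipping anything inside boilerplate elements."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # greater than 0 while inside a boilerplate element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in BOILERPLATE_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in BOILERPLATE_TAGS and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth == 0:
            self.chunks.append(data)


def centerpiece(html: str) -> str:
    parser = CenterpieceExtractor()
    parser.feed(html)
    parser.close()
    # Collapse all whitespace so layout differences do not matter.
    return " ".join(" ".join(parser.chunks).split())


def centerpiece_checksum(html: str) -> str:
    return hashlib.sha256(centerpiece(html).encode("utf-8")).hexdigest()


page_a = ("<html><body><nav>Home | Shop</nav><main><h1>Red shoes</h1>"
          "<p>Free shipping.</p></main><footer>(c) 2024 Shop A</footer></body></html>")
page_b = ("<html><body><header>Shop B</header><nav>Start</nav><main><h1>Red shoes</h1>"
          "<p>Free shipping.</p></main><footer>Contact us</footer></body></html>")
print(centerpiece(page_a))  # Red shoes Free shipping.
print(centerpiece_checksum(page_a) == centerpiece_checksum(page_b))  # True
```

The two pages have different menus and footers, but since only their centerpiece is hashed, they get the same checksum and would be treated as clones.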

What signals does Google use to find the canonical URL?

Google takes into account whether the page is served over HTTPS, whether it is included in a sitemap, and whether it redirects to another page; a redirect is a very clear sign that the target page should be the canonical one. Google uses more than 20 signals; among them, we can highlight the following:

- Content

- PageRank

- HTTPS

- Whether the page is in the sitemap file

- A redirect signal from the server

- The canonical tag (rel="canonical")
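One way to picture how these signals combine is a score computed for each clone, with the highest-scoring URL chosen as canonical. The Python sketch below is purely illustrative: Google does not publish how it weighs its signals, so the weights, the 0 to 1 `pagerank` value, the `Candidate` fields and the tie-breaking rule are all assumptions. Content is left out because all clones in a cluster share the same content, so it does not help choose between them.

```python
from dataclasses import dataclass

# Hypothetical weights: Google does not publish how it weighs its signals.
WEIGHTS = {
    "https": 1.0,
    "in_sitemap": 1.0,
    "redirect_target": 3.0,
    "canonical_tag_target": 3.0,
}


@dataclass
class Candidate:
    url: str
    https: bool = False
    in_sitemap: bool = False
    redirect_target: bool = False       # other clones redirect to this URL
    canonical_tag_target: bool = False  # other clones' rel="canonical" points here
    pagerank: float = 0.0               # hypothetical link-based score in [0, 1]


def score(c: Candidate) -> float:
    """Sum the PageRank value and the weight of every signal that is present."""
    total = c.pagerank
    for signal, weight in WEIGHTS.items():
        if getattr(c, signal):
            total += weight
    return total


def pick_canonical(cluster: list[Candidate]) -> str:
    # Highest score wins; ties go to the shorter, then alphabetically first, URL.
    best = min(cluster, key=lambda c: (-score(c), len(c.url), c.url))
    return best.url


cluster = [
    Candidate("http://example.com/shoes", pagerank=0.4),
    Candidate("https://example.com/shoes", https=True, in_sitemap=True,
              canonical_tag_target=True, pagerank=0.3),
    Candidate("https://example.com/shoes?ref=mail", https=True, pagerank=0.1),
]
print(pick_canonical(cluster))  # https://example.com/shoes
```

Giving redirects and canonical tags more weight than the other signals reflects the idea above that a redirect is a very clear sign, but the exact numbers are arbitrary.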

What you can do to avoid duplicate content

If you want to avoid duplicate content, you can follow these tips:

- Create unique and exclusive content for your audience

- Use the rel="canonical" tag

- Block robots' access to duplicate pages (for example, with robots.txt)

- Create 301 redirects
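A 301 redirect is configured on the server. As a minimal sketch, assuming a hypothetical `REDIRECTS` mapping from duplicate paths to canonical paths, this Python WSGI application answers `301 Moved Permanently` with a `Location` header for known duplicates and serves every other path normally. In practice, redirects are usually set up in the web server or CMS configuration; this only shows the shape of the response.

```python
# Hypothetical mapping from duplicate paths to their canonical path.
REDIRECTS = {
    "/shoes/": "/shoes",
    "/index.html": "/",
    "/old-shoes-page": "/shoes",
}


def app(environ, start_response):
    """Minimal WSGI app: 301-redirect known duplicates, serve everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 Moved Permanently: a strong signal that the target is canonical.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"Content for {path}".encode("utf-8")]
```

For local testing, the app can be served with the standard library's `wsgiref.simple_server.make_server("", 8000, app)`.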

What you can do if you detect duplicate content

If you discover that a web page has the same content as yours, you have several options. The most conciliatory is to contact the site's webmaster and point out that their site contains the same content as yours. Ask them to remove it or, failing that, at least to include a link to your page. Be courteous and polite: webmasters are often not the ones who created the content and usually do not approve of these practices. Another option is to ask Google to remove the plagiarized content from its search results.